The realm of Software Patents is often considered to be a fairly new field which isn’t really influenced by anything else that goes on in the legal lansdcape. In particular there’s a very old field of patent law called exhaustion which had, up until a few years ago, never been applied to software patents. This lack of application means that exhaustion is rarely raised as a defence against infringement and thus it is regarded as an untested strategy. Van Lindberg recently did a FOSDEM presentation containing interesting ideas about how exhaustion might apply to software patents in the light of recent court decisions. The intriguing possibility this offers us is that we may be close to an enforceable court decision (at least in the US) that would render all patents in open source owned by community members exhausted and thus unenforceable. The purpose of this blog post is to explain the current landscape and how we might be able to get the necessary missing court decisions to make this hope a reality.

What is Patent Exhaustion?

Patent law is ancient, going back to Greece in around 500BC. However, every legal system has been concerned that patent holders, being an effective monopoly with the legal right to exclude others, did not abuse that monopoly position. This lead to the concept that if you used your monopoly power to profit, you should only be able to do it once for the same item so that absolute property rights couldn’t be clouded by patents. This leads to something called the exhaustion doctrine: so if Alice holds a patent on some item which she sells to Bob and Bob later sells the same item to Charlie, Alice can’t force Bob or Charlie to give her a part of their sale proceeds in exchange for her allowing Charlie to practise the patent on the item. The patent rights are said to be exhausted with the sale from Alice to Bob, so there are no patent rights left to enforce on Charlie. The exhaustion doctrine has since been expanded to any authorized transfer, even if no money changes hands (so if Alice simply gave Bob the item instead of selling it, the patent still exhausts at that transaction and Bob is still free to give or sell the item to Charlie without interference from Alice).

Of course, modern US patent rights have been around now for two centuries and in that time manufacturers have tried many ingenious schemes to get around the exhaustion doctrine profitably, all of which have so far failed in the courts, leading to quite a wealth of case law on the subject. The most interesting recent example (Lexmark v Impression) was over whether a patent holder could use their patent power to enforce any onward conditions at all for which the US Supreme Court came to the conclusive finding: they can’t and goes on to say that all patent rights in the item terminate in the first authorized transfer. That doesn’t mean no post sale conditions can be imposed, they can by contract or licence or other means, it just means post sale conditions can’t be enforced by patent actions. This is the bind for Lexmark: their sales contracts did specify that empty cartridges couldn’t be resold, so their customers violated that contract by selling the cartridges to Impression to refill and resell. However, that contract was between Lexmark and the customer not Lexmark and Impression, so absent patent remedies Lexmark has no contractual case against Impression, only against its own customers.

Can Exhaustion apply if Software isn’t actually sold?

The exhaustion doctrine actually has an almost identical equivalent for copyright called the First Sale doctrine. Back when software was being commercialized, no software distributor liked the idea that copyright in software exhausts after it is sold, so the idea of licensing instead of selling software was born, which is why you always get that end user licence agreement for software you think you bought. However, this makes all software (including open source) a very tricky for patent exhaustion because there’s no first sale to exhaust the rights.

The idea that Exhaustion didn’t have to involve an exchange of something (so became authorized transfer instead of first sale) in US law is comparatively recent, dating to a 2013 decision LifeScan v Shasta where one point won on appeal was that giving away devices did exhaust the patent. The idea that authorized transfer could extend to software downloads really dates to Cascades v Samsung in 2014.

The bottom line is that exhaustion does apply to software and downloading is an authorized transfer within the meaning of the Exhaustion Doctrine.

The Implications of Lexmark v Impression for Open Source

The precedent for Open Source is quite clear: Patents cannot be used to impose onward conditions that the copyright licence doesn’t. For instance the Open Air Interface 5G alliance public licence attempts just such a restriction in clause 3 “Grant of Patent License” where it tries to restrict the grant to being only if you use the source for “study and research” otherwise you need an additional patent licence from OAI. Lexmark v Impressions makes that clause invalid in the licence: once you obtain open source under the OAI licence, the OAI patents exhaust at that point and there are no onward patent rights left to enforce. This means that source distributed under OAI can be reused under the terms of the copyright licence (which is permissive) without any fear of patent restrictions. Now OAI can still amend its copyright licence to impose the field of use restrictions and enforce them via copyright means, it just can’t use patents to do so.

FRAND and Open Source

There have recently been several attempts to claim that FRAND patent enforcement and Open Source licensing can be compatible, or more specifically a FRAND patent pool holder like a Standards Development Organization can both produce an Open Source reference implementation and still collect patent Royalties. This looks to be wrong, however; the Supreme Court decision is clear: once a FRAND Patent pool holder distributes any code, that distribution is an authorized transfer within the meaning of the first sale doctrine and all FRAND pool patents exhaust at that point. The only way to enforce the FRAND royalty payments after this would be in the copyright licence of the code and obviously such a copyright licence, while legal, would not be remotely an Open Source licence.

Exhausting Patents By Distribution

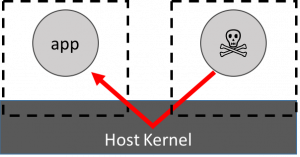

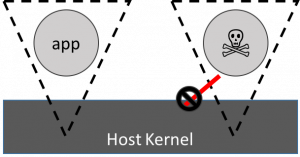

The next question to address is could patents become exhausted simply because the holder distributed Open Source code in any form? As I said before, there is actually a case on point for this as well: Cascades v Samsung. In this case, Cascades tried to sue Samsung for violating a patent on the Dalvik JIT engine in AOSP. Cascades claimed they had licensed the patent to Google for a payment only for use in Google products. Samsung claimed exhaustion because Cascades had licensed the patent to Google and Samsung downloaded AOSP from Google. The court agreed with this and dismissed the infringement action. Case closed, right? Not so fast: it turns out Cascades raised a rather silly defence to Samsung’s claim of exhaustion, namely that the authorized transfer under the exhaustion doctrine didn’t happen until Samsung did the download from Google, so they were still entitled to enforce the Google products only restriction. As I said in the beginning courts have centuries of history with manufacturers trying to get around the exhaustion doctrine and this one crashed and burned just like all the others. However, the question remains: if Cascades had raised a better defence to the exhaustion claim, would they have prevailed?

The defence Cascades could have raised is that Samsung didn’t just download code from Google, they also copied the code they downloaded and those copies should be covered under the patent right to exclude manufacture, which didn’t exhaust with the download. To illustrate this in the Alice, Bob, Charlie chain: Alice sells an item to Bob and thus exhausts the patent so Bob can sell it on to Charlie unencumbered. However that exhaustion does not give either Bob or Charlie the right to manufacture a new copy of the item and sell it to Denise because exhaustion only applies to the same item Alice sold, not to a newly manufactured copy of that item.

The copy as new manufacture defence still seems rather vulnerable on two grounds: first because Samsung could download any number of exhausted copies from Google, so what’s the difference between them downloading ten copies and them downloading one copy and then copying it themselves nine times. Secondly, and more importantly, Cascades already had a remedy in copyright law: their patent licence to Google could have required that the AOSP copyright licence be amended not to allow copying of the source code by non-Google entities except on payment of royalties to Cascades. The fact that Cascades did not avail themselves of this remedy at the time means they’re barred from reclaiming it now via patent action.

The bottom line is that distribution exhausts all patents reading on the code you distribute is a very reasonable defence to maintain in a patent infringement lawsuit and it’s one we should be using much more often.

Exhaustion by Contribution

This is much more controversial and currently has no supporting case law. The idea is that Distribution can occur even with only incremental updates on the existing base (git pull to update code, say), so if delta updates constitute an authorized transfer under the exhaustion doctrine, then so must a patch based contribution, being a delta update from a contributor to the project, be an authorized transfer. In which case all patents which read on the project at the time of contribution must also exhaust when the contribution is made.

Even if the above doesn’t fly, it’s undeniable most contributions today are made by cloning a git tree and republishing it plus your own updates (essentially a github fork) which makes you a bona fide distributor of the whole project because it can all be downloaded from your cloned tree. Thus I think it’s reasonable to hold that all patents owned by distributors and contributors in an open source project have exhausted in that project. In other words all the arguments about the scope and extent of patent grants and patent capture in open source licences is entirely unnecessary.

Therefore, all active participants in an Open Source community ipso facto exhaust any patents on the community code as that code is redistributed.

Implications for Proprietary Software

Firstly, it’s important to note that the exhaustion arguments above have no impact on the patentability of software or the validity of software patents in general, just on their enforcement. Secondly, exhaustion is triggered by the unencumbered right to redistribute which is present in all Open Source licences. However, proprietary software doesn’t come with a right to redistribute in the copyright licence, meaning exhaustion likely doesn’t trigger for them. Thus the exhaustion arguments above have no real impact on the ability to enforce software patents in proprietary code except that one possible defence that could be raised is that the code practising the patent in the proprietary software was, in fact, legitimately obtained from an open source project under a permissive licence and thus the patent has exhausted. The solution, obviously, is that if you worry about enforceability of patents in proprietary software, always use a copyleft licence for your open source.

What about the Patent Troll Problem?

Trolls, by their nature, are not IP producing entities, thus they are not ecosystem participants. Therefore trolls, being outside the community, can pursue infringement cases unburdened by exhaustion problems. In theory, this is partially true but Trolls don’t produce anything, therefore they have to acquire their patents from someone who does. That means that if the producer from whom the troll acquired the patent was active in the community, the patent has still likely exhausted. Since the life of a patent is roughly 20 years and mass adoption of open source throughout the software industry is only really 10 years old1 there still may exist patents owned by Trolls that came from corporations before they began to be Open Source players and thus might not be exhausted.

The hope this offers for the Troll problem is that in 10 years time, all these unexhausted patents will have expired and thanks to the onward and upward adoption of open source there really will be no place for Trolls to acquire unexhausted patents to use against the software industry, so the Troll threat is time limited.

A Call to Arms: Realising the Elimination of Patents in Open Source

Your mission, should you choose to be part of this project, is to help advance the legal doctrines on patent exhaustion. In particular, if the company you work for is sued for patent infringement in any Open Source project, even by a troll, suggest they look into asserting an exhaustion based defence. Even if your company isn’t currently under threat of litigation, simply raising awareness of the option of exhaustion can help enormously.

The first case an exhaustion defence could potentially be tried is this one: Sequoia Technology is asserting a patent against LVM in the Linux kernel. However it turns out that patent 6,718,436 is actually assigned to ETRI, who merely licensed it to Sequoia for the purposes of litigation. ETRI, by the way, is a Linux Foundation member but, more importantly, in 2007 ETRI launched their own distribution of Linux called Booyo which would appear to be evidence that their own actions as a distributor of the Linux Kernel have exhaused patent 6,718,436 in Linux long before they ever licensed it to Sequoia.

If we get this right, in 10 years the Patent threat in Open Source could be history, which would be a nice little legacy to leave our children.