Usually container-related stuff goes on the $EMPLOYER blog, but this time I had a container need for my hobbies. The problem: how to build and test efitools for arm and aarch64 without possessing any physical hardware. The solution is to build an architecture emulation container using qemu and mount namespaces, such that when it’s entered you find yourself in your home directory but with the rest of Linux running natively (well, emulated natively via qemu) as a new architecture.

Binary emulation in Linux is nothing new: the binfmt_misc kernel module does it, and can execute anything provided you’ve told it what header to expect and how to do the execution. Most distributions come with a qemu-linux-user package which will usually install the necessary binary emulators via qemu to run non-native binaries. However, there’s a problem here: the installed binary emulator usually runs as /usr/bin/qemu-${arch}, so if you’re running a full operating system container, you can’t install any package that would overwrite that. Unfortunately for me, the openSUSE Build Service package osc requires qemu-linux-user and would cause the overwrite of the emulator and the failure of the container. The solution to this was to bind mount the required emulator into the / directory, where it wouldn’t be overwritten, and to adjust the binfmt_misc paths accordingly.

Aside about binfmt_misc

The documentation for this only properly seems to exist in the kernel Documentation directory as binfmt_misc.txt. However, very roughly, the format is

:name:type:offset:magic:mask:interpreter:flags

name is just a handle which will appear in /proc/sys/fs/binfmt_misc. type is M for magic or E for extension (M means recognise the type by the binary header, the usual UNIX way, and E means recognise the type by the file extension, the Windows way). offset is where in the file to find the magic header to recognise. mask is the bit mask to AND the binary string with, and magic is the value to find once the masking is done. interpreter is the name of the interpreter to execute and flags tells binfmt_misc how to execute the interpreter. For qemu, the flags always need to be OC, meaning open the binary and generate credentials based on it (this can be seen as a security problem because the interpreter will execute with the same user and permissions as the binary, so you have to trust it).

If you’re on a systemd system, you can put all the above into /etc/binfmt.d/file.conf and systemd will feed it to binfmt_misc on boot. Here’s an example of the aarch64 emulation file I use.
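As a rough sketch of how such an entry is put together, the snippet below assembles a registration line from the seven fields described above. The function name binfmt_line is made up for illustration, the magic and mask are the aarch64 ELF header values commonly shipped with qemu's binfmt configuration (an assumption worth checking against what your distribution installs), and /qemu-aarch64 assumes the emulator has been bind mounted into / as described earlier.

```shell
#!/bin/sh
# Sketch: assemble a binfmt_misc registration line from its fields.
# Field order follows :name:type:offset:magic:mask:interpreter:flags
# binfmt_line is a hypothetical helper, not part of any package.
binfmt_line() {
    # $1=name $2=type $3=offset $4=magic $5=mask $6=interpreter $7=flags
    printf ':%s:%s:%s:%s:%s:%s:%s\n' "$1" "$2" "$3" "$4" "$5" "$6" "$7"
}

# ELF header of a 64-bit little-endian aarch64 binary (machine type 0xb7).
# Assumed values: compare with the entry your qemu package ships.
AARCH64_MAGIC='\x7fELF\x02\x01\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x02\x00\xb7\x00'
AARCH64_MASK='\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xfe\xff\xff\xff'

# An empty offset means "start of file"; OC are the flags discussed above.
binfmt_line qemu-aarch64 M '' "$AARCH64_MAGIC" "$AARCH64_MASK" /qemu-aarch64 OC
```

Redirecting that output (as root) into a file under /etc/binfmt.d, for instance a hypothetically named qemu-aarch64.conf, gives systemd something to register at boot.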

Bootstrapping

To bring up a minimal environment that’s fully native, you need to bootstrap it by installing just enough binaries using your native system before you can enter the container. At a minimum, this is enough shared libraries and binaries to run the shell. If you’re on a Debian system, you probably already know how to use debootstrap to do this, but if you’re on openSUSE, like me, this is a much harder proposition because persuading zypper to install non-native binaries isn’t easy. The first thing you need to know is that you need to install an architectural override for libzypp in the file pointed to by the ZYPP_CONF environment variable. Here’s an example of a susebootstrap shell script that will install enough of the architecture to run zypper (so you can install all the packages you actually need). Just run it as follows (note: you must have the qemu-<arch> binary installed, because the installer will try to run pre and post scripts, which may fail if they’re binary unless the emulation is working):

susebootstrap --arch <arch> <location>

And the bootstrap image will be built at <location> (I usually choose somewhere in my home directory, but you can use /var/tmp or anywhere else in your filesystem tree). Note this script must be run as root because zypper can’t change ownership of files otherwise. Now you are ready to start the architecture emulation container with <location> as the root.
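To give a feel for the libzypp architectural override mentioned above, here is a minimal sketch that writes a configuration file setting the arch option and prints (rather than runs) a zypper command that would pick it up. This is not the susebootstrap script: write_zypp_conf is a hypothetical helper, the scratch directory is arbitrary, and the architecture string has to be whatever libzypp calls your target.

```shell
#!/bin/sh
# Sketch: write a libzypp configuration that overrides the detected
# architecture. write_zypp_conf is a hypothetical helper; nothing here
# is taken from the susebootstrap script itself.
write_zypp_conf() {
    # $1 = target architecture as libzypp names it, $2 = output file
    printf '[main]\narch = %s\n' "$1" > "$2"
}

# Example: generate the override in a scratch directory and show the
# kind of zypper invocation that would use it (printed, not executed).
conf_dir=$(mktemp -d)
write_zypp_conf aarch64 "$conf_dir/zypp.conf"
cat "$conf_dir/zypp.conf"
echo "ZYPP_CONF=$conf_dir/zypp.conf zypper --root <location> install zypper"
```

A real bootstrap also has to add repositories for the target architecture to the new root before the install step can find anything, which this sketch leaves out.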

Building an Architecture Emulation Container

All you really need now is a mount namespace with <location> as the real root and all the necessary Linux filesystems like /sys and /proc mounted. Additionally, you usually want /home and I also mount /var/tmp so there’s a standard location for all my obs build directories. Building a mount namespace is easy: simply unshare --mount and then bind mount everything you need. Finally, you use pivot_root to swap the new and old roots and umount -l the old root (-l is necessary because the mount point is in use outside the mount namespace as your real root, so you just need it unbinding; you don’t need to wait until no-one is using it).
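The sequence just described can be sketched roughly as follows. This is an illustration under assumptions, not the script I use: setup_root, run and DRY_RUN are made-up names, the old-root directory is just a convenient put-old location, and making / recursively private up front is one way of keeping mount events inside the namespace. By default the commands are only printed, so it is safe to try as an ordinary user.

```shell
#!/bin/sh
# Sketch of the steps to run inside a fresh mount namespace, e.g. from
#   unshare --mount sh this-script <location> <arch>
# With DRY_RUN=1 (the default here) commands are printed, not executed.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

setup_root() {
    loc=$1 arch=$2
    run mount --make-rprivate /            # keep mount events in this namespace
    run mount --bind "$loc" "$loc"         # pivot_root needs a mount point
    run mount -t proc proc "$loc/proc"
    run mount --rbind /sys "$loc/sys"
    run mount --rbind /dev "$loc/dev"
    run mount --bind /home "$loc/home"
    run mount --bind /var/tmp "$loc/var/tmp"
    # Bind the emulator into / of the new root so packages cannot replace it.
    run touch "$loc/qemu-$arch"
    run mount --bind "/usr/bin/qemu-$arch" "$loc/qemu-$arch"
    run mkdir -p "$loc/old-root"
    run cd "$loc"
    run pivot_root . old-root              # swap new and old roots
    run umount -l /old-root                # lazily detach the old root
}

setup_root "${1:-/path/to/location}" "${2:-arm}"
```

Setting DRY_RUN=0 and running it as root inside unshare --mount would execute the commands for real, so read through the printed list first.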

All of this is easily scripted and I created this script to perform these actions. As a final act, the script binds the process and creates an entry link in /run/build-container/<arch>. This is the command line I used for the example below:

build-container --arch arm --location /home/jejb/tmp/arm

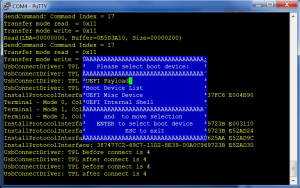

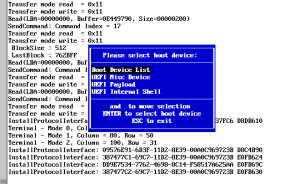
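One way an entry like /run/build-container/<arch> can be created is by bind mounting the mount namespace file of a process living in the container onto a plain file. The sketch below shows that technique under assumptions: pin_namespace, run and DRY_RUN are made-up names, the pid is a placeholder, and the directory is first turned into a private mount because a namespace file cannot be bound onto a shared one. It only prints the commands by default.

```shell
#!/bin/sh
# Sketch: keep a mount namespace alive by bind mounting its /proc entry.
# With DRY_RUN=1 (the default here) commands are printed, not executed.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

pin_namespace() {
    # $1 = pid of a process inside the namespace, $2 = architecture name
    pid=$1 arch=$2 dir=/run/build-container
    run mkdir -p "$dir"
    # The namespace file has to be bound onto a non-shared mount.
    run mount --bind "$dir" "$dir"
    run mount --make-private "$dir"
    run touch "$dir/$arch"
    run mount --bind "/proc/$pid/ns/mnt" "$dir/$arch"
}

# 1234 is a placeholder pid for illustration only.
pin_namespace "${1:-1234}" "${2:-arm}"
```

Once the bind mount exists, the namespace stays around even after the original process exits, and nsenter can be pointed at the file.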

Now entering the build container is easy (you still have to enter the namespace as root, but you can exec su - <user> to become whatever your non-root user is).

    jejb@jarvis:~> sudo -s
    jarvis:/home/jejb # uname -m
    x86_64
    jarvis:/home/jejb # nsenter --mount=/run/build-container/arm
    jarvis:/ # uname -m
    armv7l
    jarvis:/ # exec su - jejb
    jejb@jarvis:~> uname -m
    armv7l
    jejb@jarvis:~> pwd
    /home/jejb

And there you are, all ready to build binaries and run them on an armv7 system.

Aside about systemd and Shared Subtrees

On a normal Linux system, you wouldn’t need to worry about any of this, but if you’re running systemd, you do, because systemd has some very inimical properties (to mount namespaces) you need to be aware of.

In Linux, a bind mount creates a subtree. Because you can bind mount from practically anywhere to anywhere, you can have many such subtrees that are substantially related. The default way to create subtrees is “private”, which means that even if the subtrees are effectively the same set of files, a mount operation on one isn’t seen by any of the others. This is great, because it’s precisely what you want for containers. However, if a subtree is set to shared (with the mount --make-shared command) then all mount and unmount operations are propagated to every shared copy.

The reason this matters for systemd is that systemd at start of day sets every mount point in the system to shared. Unless you re-privatise the bind mounts as you create the architecture emulation container, you’ll notice some very weird effects. Firstly, because pivot_root won’t pivot to a shared subtree, that call will fail. Secondly, you’ll notice that when you umount -l /old-root it will propagate to the real root and unmount everything (like your root /proc, /dev and /sys), effectively rendering your system unusable. The mount --make-rprivate /old-root command recursively descends /old-root and sets all the mounts to private, so the umount -l simply detaches /old-root instead of propagating all the umount events.
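If you want to see which of your mounts are shared before experimenting, the kernel exposes the propagation state in /proc/self/mountinfo: shared mounts carry a shared:N tag in the optional fields before the lone "-" separator. The helper below is a small sketch for reading it; mount_propagation is a made-up name, and mounts with neither tag are reported as private, which glosses over the unbindable case.

```shell
#!/bin/sh
# Sketch: print "<mount point> <propagation>" for mountinfo-format input.
# mount_propagation is a hypothetical helper. Optional fields start at
# field 7 and run up to the "-" separator.
mount_propagation() {
    awk '{
        prop = "private"
        for (i = 7; i <= NF && $i != "-"; i++) {
            if ($i ~ /^shared:/) prop = "shared"
            else if ($i ~ /^master:/ && prop == "private") prop = "slave"
        }
        print $5, prop
    }' "$@"
}

# Show the state of the current namespace when run on Linux.
if [ -r /proc/self/mountinfo ]; then
    mount_propagation /proc/self/mountinfo
fi
```

On a systemd machine I would expect nearly every line to say shared in the initial namespace; after a recursive make-rprivate inside the container's namespace the same mounts should show up as private there.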